HeartRateNet Model Card

Model Overview

The model described in this card is a non-invasive heart rate estimation network, which aims to estimate heart rates from RGB facial videos.

Model Architecture

This is a two branch model with an attention mechanism that takes in a motion map and an appearance map both derived from RGB face videos. The motion maps are two consecutive (current and previous) frames' with a face region of interest (ROI) difference. The appearance map is the current frame obtained from the camera with the same ROI as the motion map. The appearance map is primary used as the attention mechanism which allows the model to focus on the more important features extracted from the model.

For more information on motion map, appearance map and attention mechanisms, how they are applied in this model, and the benefits on using attention mechanisms please see DeepPhys: Video-Based Physiological Measurement Using Convolutional Attention Networks.

Training Algorithm

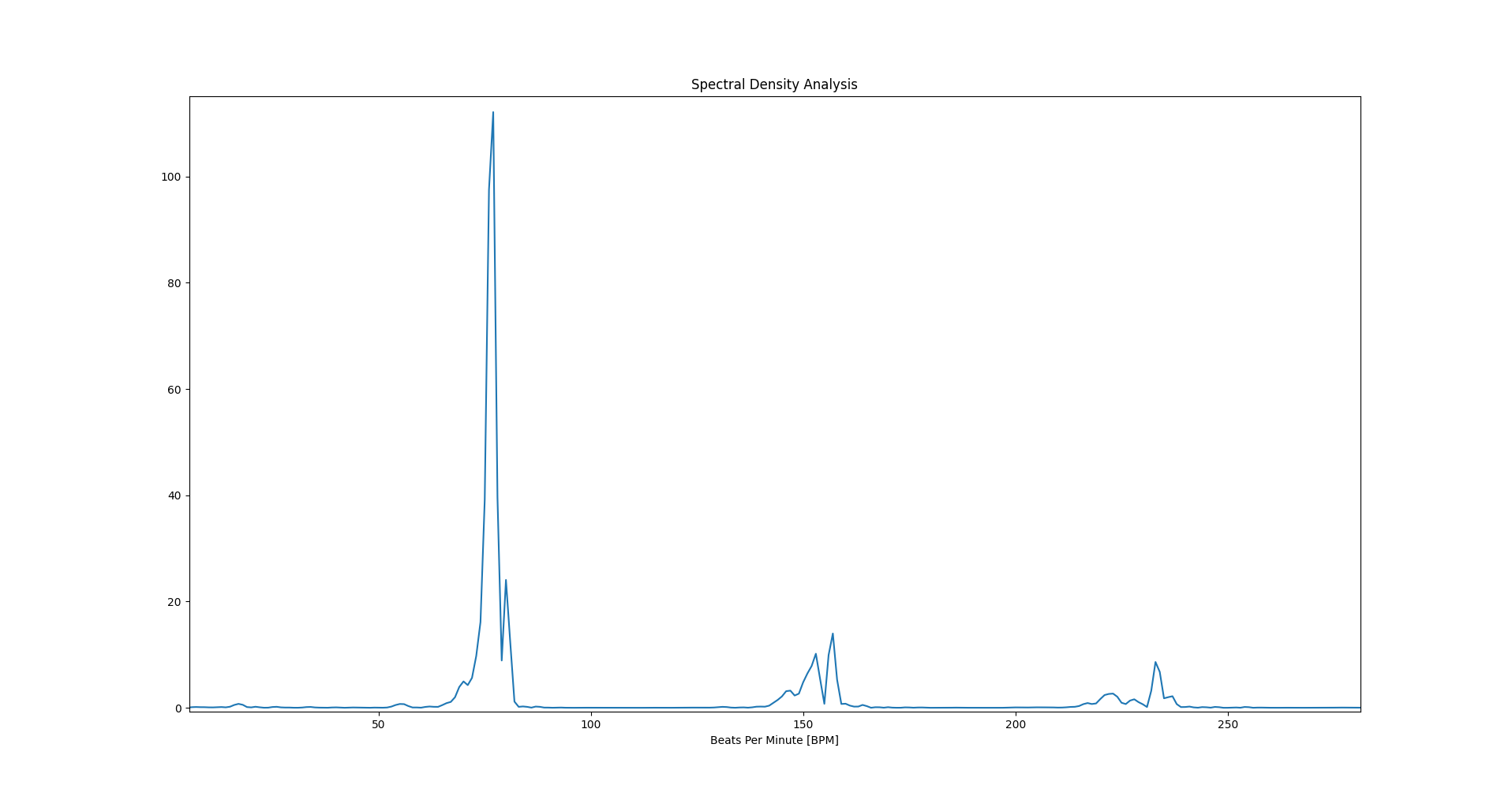

This model was trained using the HeartRateNet entrypoint in TAO. The training algorithm optimizes the network to minimize the mean squared error (MSE) between a ground truth photoplethysmogram (PPG) signal and a predicted photopleythysmogram signal. Please refer to the DeepPhys paper for the model architecture. The output from the model is the predicted PPG signal. The heartrate is extracted from the predicted PPG signal (model output) by first applying a 6th-order Butterworth filter. The lower and upper frequencies used were 40 BPM and 240 BPM, respectively. The filtering removes any unwanted noise outside the target heart rate BPM range. The SNR was calculated in the frequency domain as the ratio between the energy around the first two harmonics (0.2 Hz frequency bins around the ground truth heart rate) and remaining frequencies within a range of [0.7 4] Hz.

Training Data

HeartRateNet model was trained on a proprietary dataset with over 30000 raw images. The training dataset consists of various skin tones, heart rates, and exercising / no exercising cases. Most of the data was collected from low cost webcams built into laptops or USB webcams such as the Logitech C270 HD Webcam. The average distance from the webcam is approximately 3 feet and the field of view contains the target face to estimate the heart rate. The ground truth label was collected using the following pulse oximeters: CMS50d.

Data Format

Training

The data format must be in the following format.

/Subject_001

/images

0000.bmp

0001.bmp

0002.bmp

...

...

...

N.bmp

ground_truth.csv

image_timestamps.csv

.

.

.

/Subject_N

/images

0000.bmp

0001.bmp

0002.bmp

...

...

...

M.bmp

ground_truth.csv

image_timestamps.csv

haarcascade_frontalface_default.xml

Where N does not need to be the same as M.

The format for ground_truth.csv (only shows 3 samples) is as follows:

| Time | PulseWaveform |

| 1532558778.249175 | 72 |

| 1532558778.265609 | 70 |

| 1532558778.282607 | 66 |

| The format for image_timestamps.csv (only shows 3 frames) is as follows: |

| ID | Time |

| 0 | 1532558778.634143 |

| 1 | 1532558778.666368 |

| 2 | 1532558778.70147 |

The haarcascade_frontalface_default.xml can be downloaded from https://github.com/opencv/opencv/tree/master/data/haarcascades and is needed to preprocess the data for training.

Inference

To ensure the model works as expected, it is suggested that the inference data is face cropped to the required dimensions, there is at least 10 seconds of video, and only mild head movement is allowed.

The expected data format is given as

/<subject_name_1>

/images

0000.bmp

0001.bmp

0002.bmp

...

...

...

N.bmp

...

/<subject_name_2>

/images

0000.bmp

0001.bmp

0002.bmp

...

...

...

M.bmp

Performance

Evaluation Data

Dataset

The inference performance of HeartRateNet model was measured against 33346 raw bitmap images taken at various environments. The frames are captured at 640x480 and at an average 30 frames per second. The face ROI is then obtained from the 640x480 images and then resized down to either 36x36x3 or 72x72x3 while maintaining the aspect ratios to prevent any scaling artifacts.

Methodology and KPI

The key performance indicator is Mean Absolute Error (MAE) which is the absolute heartrate error in beat per minute (bpm) compared with groundtruth heartrate. MAE of our pretrained model is 1.4 bpm.

Real-time Inference Performance

The inference uses FP16 precision. The inference performance runs with trtexec on Jetson Nano, AGX Xavier, Xavier NX and NVIDIA T4 GPU. The Jetson devices run at Max-N configuration for maximum system performance. The end-to-end performance with streaming video data might slightly vary depending on use cases of applications.

| Device | Precision | Batch_size | FPS |

|---|---|---|---|

| Nano | FP16 | 1 | 380 |

| NX | FP16 | 1 | 1492 |

| Xavier | FP16 | 1 | 2398 |

| T4 | FP16 | 1 | 6226 |

How to use this model

This model needs to be used with NVIDIA Hardware and Software. For Hardware, the model can run on any NVIDIA GPU including NVIDIA Jetson devices. This model can only be used with Train Adapt Optimize (TAO) Toolkit, DeepStream 6.0 or TensorRT. Primary use case intended for this model is estimating heart rates from RGB face videos.

There are two flavors of the model:

- trainable

- deployable

The trainable model is intended for training using TAO Toolkit and the user's own dataset. This can provide high fidelity models that are adapted to the use case. The Jupyter notebook available as a part of TAO container can be used to re-train.

The deployable model is intended for efficient deployment on the edge using DeepStream or TensorRT.

The trainable and deployable models are encrypted and will only operate with the following key:

- Model load key:

nvidia_tlt

Please make sure to use this as the key for all TAO commands that require a model load key.

Input

Image of face crop with dimension 3x72x72, input crop size and data format (channels first or last) is configurable

Output

Estimated heart rate in beats per minute.

Instructions to use the model with TAO

In order to use these models as pretrained weights for transfer learning, please use the snippet below as a template for the model_config component of the experiment spec file to train a HeartRateNet model. For more information on experiment spec file, please refer to the TAO Toolkit User Guide.

model_config {

input_size: 72

data_format: 'channels_first'

conv_dropout_rate: 0.20

fully_connected_dropout_rate: 0.5

use_batch_norm: False

model_type: 'HRNet_relase'

}

Instructions to deploy this model with DeepStream

To create the entire end-to-end video analytic application, deploy this model with DeepStream.

Limitations

Occluded Faces

NVIDIA HeartRateNet model was trained on faces that have not been occluded. The model may not be able to give accuracte predictions when the face is occluded.

Dark-lighting, Monochrome or Infrared Camera Images

The HeartRateNet model was trained on RGB images in good lighting conditions. Therefore, images captured in dark lightning conditions or any light source other than RGB may not provide good heart rate estimations.

Head Motion

Although HeartRateNet model was trained with head motion, any extreme motion may result in poor performance.

Compressed Videos

The current HeartRateNet model was trained on raw bitmap images without any compression. Compressed videos may result in poor performance due to compression artifacts.

Model versions:

- trainable_v2.0 - Pre-trained model that is intended for training.

- deployabale_v2.0 - Deployment models that is intended to run on the inference pipeline.

References

Citations

- Chen, W., McDuff, D.: DeepPhys: Video-Based Physiological Measurement Using Convolutional Attention Networks. In: ECCV (2018)

Using TAO Pre-trained Models

- Get TAO Container

- Get other Purpose-built models from NGC model registry:

Technical blogs

- Read the 2 part blog on training and optimizing 2D body pose estimation model with TAO - Part 1 | Part 2

- Read the technical tutorial on how PeopleNet model can be trained with custom data using Transfer Learning Toolkit

Suggested reading

- More information on about TAO Toolkit and pre-trained models can be found at the NVIDIA Developer Zone

- TAO documentation

- Read the TAO getting Started guide and release notes.

- If you have any questions or feedback, please refer to the discussions on TAO Toolkit Developer Forums

- Deploy your models for video analytics application using DeepStream. Learn more about DeepStream SDK

- Deploy your models in Riva for ConvAI use case.

License

License to use these models is covered by the Model EULA. By downloading the unpruned or pruned version of the model, you accept the terms and conditions of these licenses.

Ethical Considerations

NVIDIA HeartRateNet model predicts heart rates. However, no additional information such as race, gender, and skin type about the faces is inferred.

NVIDIA’s platforms and application frameworks enable developers to build a wide array of AI applications. Consider potential algorithmic bias when choosing or creating the models being deployed. Work with the model’s developer to ensure that it meets the requirements for the relevant industry and use case; that the necessary instruction and documentation are provided to understand error rates, confidence intervals, and results; and that the model is being used under the conditions and in the manner intended.