Amazon SageMaker and NVIDIA GPU Cloud (NGC) Examples

This repository is a collection of notebooks that show you how to use NGC containers and models within Amazon SageMaker. It includes the following examples:

- Using an NGC PyTorch container to fine-tune a BERT model

- Using an NGC pretrained BERT model for Question-Answering in PyTorch

- Deploy an NGC SSD model for PyTorch on SageMaker

- Compile a PyTorch model from NGC to SageMaker Neo and deploy onto SageMaker

- Train and Deploy a TensorFlow BERT model using the NGC container/model to TensorRT

- Using an NGC TensorFlow container on SageMaker

- Deploy an NGC model for TensorFlow on SageMaker
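Several of the examples above run an NGC container inside SageMaker. One common route, assumed here rather than taken from the notebooks, is to copy the image from the NGC registry (`nvcr.io`) into Amazon ECR so that SageMaker can pull it. The sketch below only builds the image URI and the Docker commands as strings; the account ID, region, repository name, and image tag are hypothetical placeholders, and the helper names `ecr_image_uri` and `mirror_commands` are illustrative, not part of any SDK.

```python
from typing import List


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Build an Amazon ECR image URI from its parts."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def mirror_commands(ngc_image: str, ecr_uri: str) -> List[str]:
    """Return the Docker commands that copy an NGC image into ECR.

    Assumes you are already logged in to both registries and that the
    ECR repository exists; those steps are omitted here.
    """
    return [
        f"docker pull {ngc_image}",
        f"docker tag {ngc_image} {ecr_uri}",
        f"docker push {ecr_uri}",
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    ngc_image = "nvcr.io/nvidia/pytorch:21.02-py3"
    uri = ecr_image_uri("123456789012", "us-east-1", "ngc-pytorch", "21.02-py3")
    for command in mirror_commands(ngc_image, uri):
        print(command)
```

The resulting ECR URI is the kind of value you would then hand to SageMaker as the training or inference image. Check each notebook for the exact image and tag it expects.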

Viewing An Example

To take a look at any notebook, follow these steps:

- Navigate to the File Browser tab

- Select the version you'd like to see

- Under the actions menu (three dots) for the .ipynb file, select "View Jupyter"

- There you have it! You can read through one of the awesome AWS SageMaker examples and copy code samples without ever leaving NGC.

NVIDIA NGC

NGC, NVIDIA’s app catalog for Deep Learning, High Performance Computing, and Visualisation, gives you easy access to enterprise-grade, GPU-optimized containers, along with pretrained models, resources, Helm charts, and software development kits (SDKs) that can be deployed at scale. NGC accelerates your end-to-end AI development and deployment pipeline, empowering you to build reliable and scalable applications.

With more than 80 containers and over 100 models, NGC is quickly becoming the de facto tool for data scientists and application developers for their AI software needs.

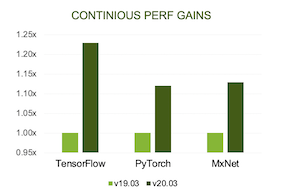

- Containers - The deep learning frameworks and HPC containers from NGC are GPU-optimized and tested on NVIDIA GPUs for scale and performance. With a one-click operation, you can easily pull, scale, and run containers in your environment. The deep learning containers from NGC are updated monthly and packed with the latest features to help you extract the maximum performance from your existing GPUs.

- Models - NGC provides pretrained models for applications such as image classification, object detection, language translation, text to speech, recommender engines, sentiment analysis, and many more. So if you’re looking to build on existing models to extend your capabilities, or to customise models with transfer learning to suit your bespoke use cases, NGC has you covered. The results from the MLPerf competition show how our GPU-optimized models reach the solution in the fastest time possible.

- Resources - NGC offers step-by-step instructions and scripts for creating deep learning models with code samples, making it easy to integrate the models into your applications. These examples come with sample performance and accuracy metrics so you can compare your results. Leveraging our expert guidance on building DL models for image classification, language translation, text-to-speech and more, you can quickly build performance-optimized models by easily adjusting the hyperparameters.

- Helm Charts - NGC offers a range of Helm charts that make it easy to deploy powerful NVIDIA and third-party software. Your IT teams can install them on Kubernetes clusters to give users faster access to run their workloads.

NGC carries lots of great content from NVIDIA and our partners that can be used for your AI applications. But if you’re after access to some exclusive applications, you can SIGN UP HERE.